Weaponized Tweets: AI Could Help Defend Against Adversary Attacks in Social Media

This article was submitted to War on the Rocks in response to the call for ideas issued by the co-chairs of the National Security Commission on Artificial Intelligence, Eric Schmidt and Robert Work. It addresses the first question, which asks authors to a) consider how AI will impact strategic rivalry, including competition below the threshold of armed conflict, and b) discuss what has already happened when the United States failed to develop robust AI capabilities to address a national security issue.

Social media is the new battleground for information warfare — and artificial intelligence provides weapons and shields in this fight. This is a story of how artificial intelligence could have helped defend America against adversarial attacks on Twitter in the run-up to the 2016 presidential election, allowing a human intelligence analyst to easily track changes in the adversary’s online tactics over time.

The Russian-backed Internet Research Agency was tweeting as early as 2012. Initially, these state-sponsored Russian trolls focused their attacks on domestic Russian issues, only later turning to foreign topics such as Ukraine, Syria, and eventually the United States. The United States did not respond until after its presidential election in November 2016. The U.S. Department of Justice then indicted key figures in the Internet Research Agency for fraud, particularly in regard to their social media activities. Twitter suspended all accounts likely belonging to this organization. The U.S. Congress commissioned two independent studies, culminating in a bipartisan Senate Select Committee on Intelligence report finding that the Russian government engaged in an aggressive, multifaceted effort to influence the outcome of the 2016 presidential election. Beginning several years before the 2016 election, Russian operatives employed by the Internet Research Agency in St. Petersburg masqueraded as Americans online, using targeted advertisements, fake news, and their own social media posts to deceive and sow discord among tens of millions of American social media users.

Russia’s social media activities continue to pose threats today as U.S. citizens cast ballots in the 2020 election, people around the world protest against systemic racism, and world governments attempt to protect their citizens from the novel coronavirus. To defend against similar information warfare campaigns in the 2020s, the U.S. government should now properly resource and task experts to build the interactive dashboard systems needed by intelligence analysts to track adversaries’ tactics over time. While similar techniques could be used in industry to track the tactics of online trolls in commercial situations, we consider here a national security application.

Avoiding the Monday-Morning Quarterback

How could the U.S. government have thwarted a social media attack in the run-up to the 2016 election? How far in advance could any indications and warnings have been revealed? To explore these questions, we considered a hypothetical scenario in which, several years before the election, a U.S. intelligence organization receives a high-confidence tip that an adversary is clandestinely posting tweets on social media. In this scenario, a trusted source even flags the particular Twitter accounts: those that, years later, Twitter attributed to the Internet Research Agency. Envisioning this scenario, we created a prototype system using open-source software tools that could have helped a U.S. intelligence analyst monitor those flagged Twitter accounts. This system could have helped that analyst practice her tradecraft by observing, at a massive scale, how the adversary’s tactics changed over time, a key to inferring the adversary’s underlying strategy in real time.

We developed a software pipeline for curating, processing, and analyzing adversary tweets using a well-known artificial intelligence technique called Latent Dirichlet Allocation. This algorithm automatically organizes the words in the tweets into underlying topics. We downloaded and processed through the algorithm a publicly available dataset of approximately 3 million tweets posted by nearly 3,000 Internet Research Agency accounts between February 2012 and May 2018. The U.S. intelligence analyst in our scenario could have then labeled the topics output by the algorithm to identify the issues that Russia was promoting on Twitter. For example, the analyst could have used the system to identify specific topics involving news, sports, politics, and race relations.
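To make the topic-modeling step concrete, here is a minimal sketch of Latent Dirichlet Allocation fitted by collapsed Gibbs sampling, written in plain Python. It is not the pipeline used for this article. The toy tweets, the stopword list, the function names, and the hyperparameters (`alpha`, `beta`, `iterations`) are all illustrative assumptions, and a real system would more likely rely on an established open-source library.

```python
import random
import re
from collections import Counter

# Hypothetical stopword list and toy tweets, invented for illustration only.
STOPWORDS = {"the", "a", "to", "of", "and", "in", "is", "you", "with"}
TOY_TWEETS = [
    "Make time to smile and give love",
    "Success is a thing you make with love",
    "Local news: police respond to fire",
    "Fire killed two, local police say in news briefing",
    "NFL game tonight, super bowl sports talk",
    "Sports fans love the super bowl game",
]

def tokenize(text):
    """Lowercase, keep alphabetic tokens, drop stopwords."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]

def fit_lda(docs, num_topics, iterations=200, alpha=0.1, beta=0.01, seed=0):
    """Collapsed Gibbs sampling for LDA over a list of tokenized documents."""
    rng = random.Random(seed)
    vocab_size = len({w for doc in docs for w in doc})
    doc_topic = [[0] * num_topics for _ in docs]         # tokens per (doc, topic)
    topic_word = [Counter() for _ in range(num_topics)]  # tokens per (topic, word)
    topic_total = [0] * num_topics                       # tokens per topic
    assignments = []
    # Start from a random topic assignment for every token.
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(num_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
        assignments.append(z_doc)
    # Repeatedly resample each token's topic given all other assignments.
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = assignments[d][i]
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][w] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1
    return doc_topic, topic_word

def top_words(topic_word, n=5):
    """Most frequent words per topic, for an analyst to read and label."""
    return [[w for w, c in counts.most_common(n) if c > 0] for counts in topic_word]

docs = [tokenize(t) for t in TOY_TWEETS]
doc_topic, topic_word = fit_lda(docs, num_topics=3)
for k, words in enumerate(top_words(topic_word)):
    print(f"topic {k}: {', '.join(words)}")
```

The algorithm only groups words. Attaching a label such as “Sports” or “Local News” to each word list remains the analyst’s job, which is why the output is a ranked word list rather than a name.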

As it turns out, Russia’s tactics evolved over time, from coarse to more refined. We uncovered multiple distinct tactical phases of the Russian attack, all of which a U.S. intelligence analyst could have identified with only one month of lag time. The Russian trolls’ English tweet topics grew more specific, more negative, and more polarizing over time, with the final pattern solidifying in late 2015, one year before the 2016 election.

2012 – 2014: Few Internet Research Agency tweets, with even fewer in English.

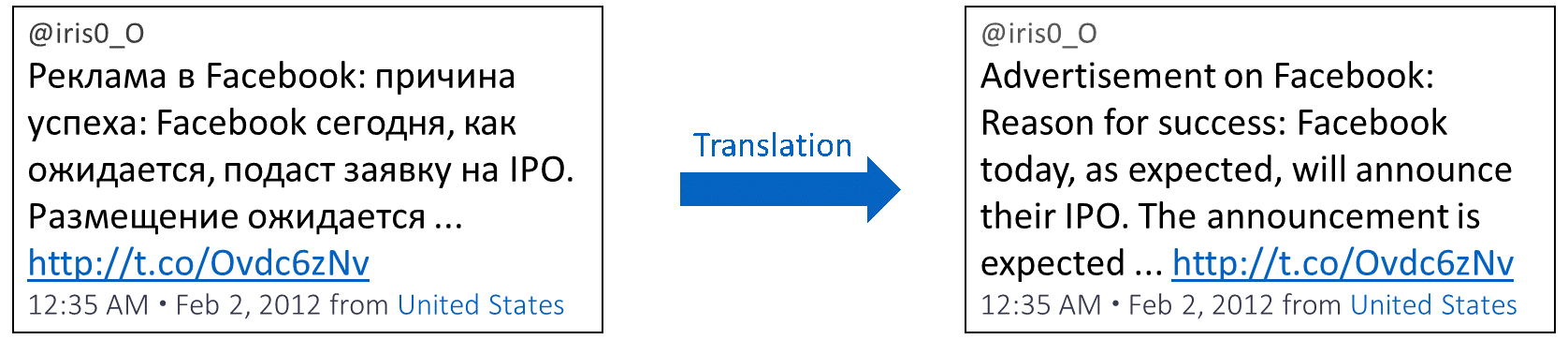

Back in the early years, only a few dozen Internet Research Agency accounts were active, each posting only a few tweets each month. Most tweets were in Russian; few were in English. Automated software tools could have identified the most prominent languages of the flagged accounts. Glancing through tweet after tweet of Cyrillic characters, an intelligence analyst could have quickly surmised that most tweets weren’t meant for an English-speaking American audience. She could have passed off these tweets to the appropriate language or regional experts and settled into a sit-and-watch mode.

Figure 1: An Early Internet Research Agency Tweet, February 2012.
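The automated language check mentioned above could start out very simply. The sketch below is a crude stand-in, not a real language identifier: it only distinguishes Cyrillic from Latin script by counting letters, so it cannot tell Russian from Ukrainian or English from German. The function names, the 50 percent threshold, and the sample tweets are assumptions made for illustration.

```python
from collections import Counter, defaultdict

def guess_script(text):
    """Classify text as 'cyrillic', 'latin', or 'unknown' by its letters."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return "unknown"
    cyrillic = sum(1 for ch in letters if "\u0400" <= ch <= "\u04FF")
    # Assumed threshold: more than half of the letters are Cyrillic.
    return "cyrillic" if cyrillic / len(letters) > 0.5 else "latin"

def monthly_script_counts(tweets):
    """Count tweets per script per month.

    tweets: iterable of (iso_timestamp, text) pairs, e.g. ("2012-02-14T09:30", "...").
    """
    counts = defaultdict(Counter)
    for timestamp, text in tweets:
        month = timestamp[:7]  # "YYYY-MM"
        counts[month][guess_script(text)] += 1
    return dict(counts)

# Invented sample data, for illustration only.
SAMPLE = [
    ("2012-02-14T09:30", "Привет, мир"),
    ("2012-02-20T18:05", "Доброе утро"),
    ("2012-02-21T07:45", "Good morning"),
    ("2015-01-03T12:00", "Local news tonight"),
]

for month, by_script in sorted(monthly_script_counts(SAMPLE).items()):
    print(month, dict(by_script))
```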

Early 2015: Significantly more Internet Research Agency tweets, most in English. Topics were loose, vague, and both positive and negative.

Things changed in late 2014 and early 2015. The Russian trolls began tweeting far more frequently, increasing their output by more than two orders of magnitude. By January 2015, English had become the most prominent language of the Internet Research Agency’s tweets. A U.S. intelligence analyst would have sat up and noticed. Why are the flagged accounts posting so frequently and so heavily in English? Are the Russians now targeting Americans? The analyst would not have been able to read through the tens of thousands of flagged tweets. Instead, she could have let her computer do the reading for her, with the artificial intelligence system supporting her workflow by automatically organizing the main topics of the tweets for her to label, thus providing semiautomated change detection of the Russians’ observable tactics.

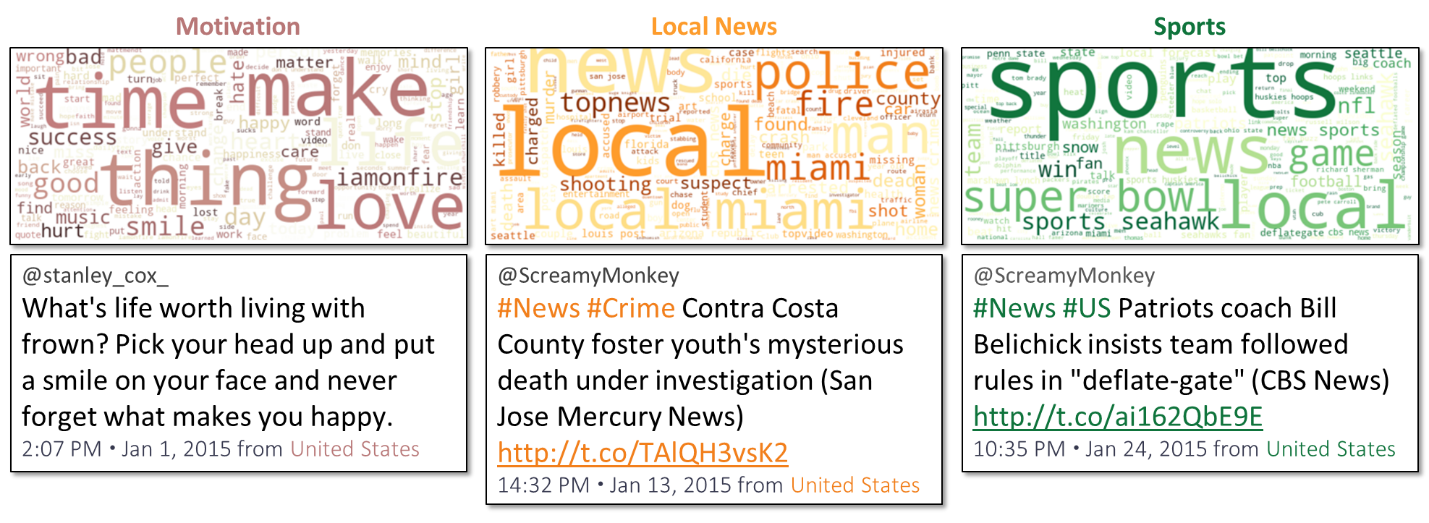

In January 2015, many of the Russian trolls’ English tweets used words like make, love, time, thing, success, give, and smile — loose, vague, and positive terms that an analyst could have categorized as “Motivation.” Also, many tweets used words like news, local, police, fire, killed, and so on — similarly loose and vague but negative terms that could have been labeled “Local News.” And many tweets used words like sports, NFL, super, bowl, and game — a “Sports” topic.

Figure 2: Three Internet Research Agency Topics With Representative Tweets, January 2015.

Armed with this information, the analyst could have reported that the adversary’s tactics had changed: They were suddenly posting much more frequently, in English, about loose, vague topics with both positive and negative affect — motivational messages, local news, and sports. By objectively aggregating a very large number of tweets, the artificial intelligence system could have allowed the analyst to provide this high-confidence assessment of the Russians’ observed tactics. However, inferring the Russians’ strategy based on those observed tactics would have still been a more difficult task in real time.
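The jump in posting volume described above is the kind of change a simple check on monthly counts could flag automatically. The sketch below compares each month with the median of the preceding months and reports jumps of at least one order of magnitude. The window length, the threshold, and the monthly counts are invented for illustration and are not figures from the dataset.

```python
import math
from statistics import median

def flag_volume_jumps(monthly_counts, window=3, min_orders=1.0):
    """Flag months whose tweet count exceeds the trailing median by orders of magnitude.

    monthly_counts: list of (month, count) pairs sorted by month.
    Returns a list of (month, orders_of_magnitude) for flagged months.
    """
    flags = []
    for i in range(window, len(monthly_counts)):
        month, count = monthly_counts[i]
        baseline = median([c for _, c in monthly_counts[i - window:i]])
        if baseline > 0 and count > 0:
            orders = math.log10(count / baseline)
            if orders >= min_orders:
                flags.append((month, round(orders, 2)))
    return flags

# Hypothetical monthly tweet counts for the flagged accounts.
COUNTS = [
    ("2014-09", 40),
    ("2014-10", 50),
    ("2014-11", 45),
    ("2014-12", 60),
    ("2015-01", 6000),
    ("2015-02", 7000),
]

for month, orders in flag_volume_jumps(COUNTS):
    print(f"{month}: volume up by about {orders} orders of magnitude")
```

A check like this only says that something changed. Deciding what the change means is still left to the analyst.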

Summer 2015: Even more Internet Research Agency tweets, most still in English. Tweets dominated by a single cultural topic and interspersed with news, some political in nature.

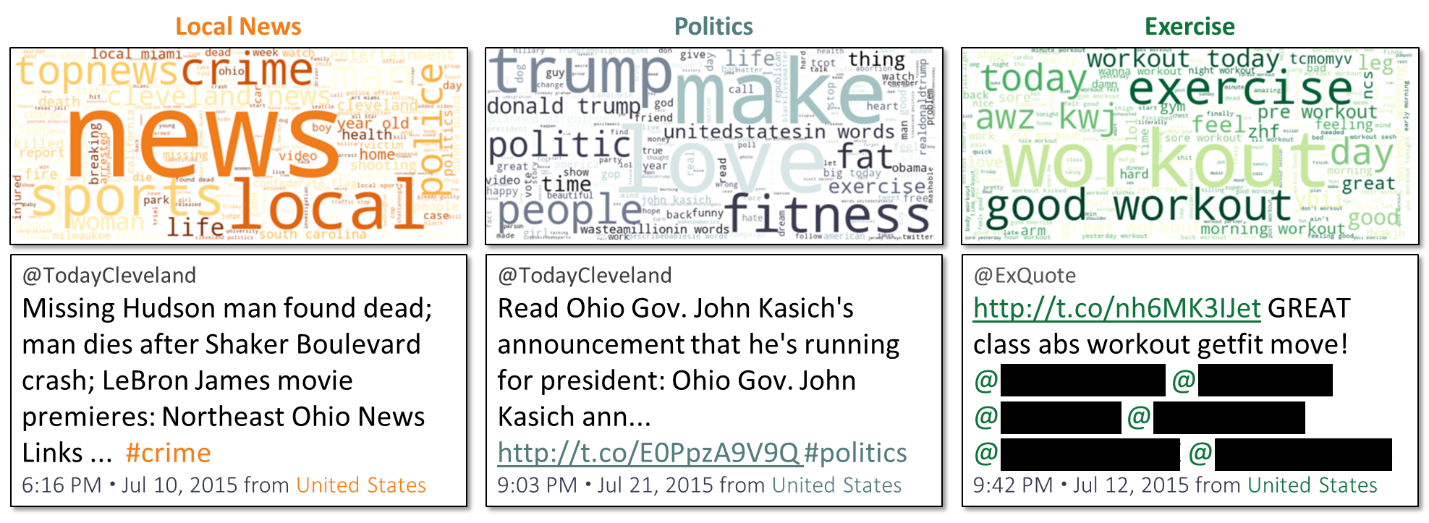

The situation changed again a few months later. In July 2015, the number of Russian troll tweets shot up by another order of magnitude. The percentage in English remained high. Some English tweets were associated with the same “Local News” topic from January 2015. Another topic category consisted of a hodgepodge of words, some political in nature. And a large number of tweets were associated with a new topic: “Exercise.” The Internet Research Agency may have used these “Exercise” tweets to pique interest from other Twitter users and garner a following; in fact, many “Exercise” tweets tagged the handles of other Twitter accounts, blacked out for privacy below:

Figure 3: Three Internet Research Agency Topics with Representative Tweets, July 2015.

The analyst could have reported that the Russians had changed their tactics again: from loose, vague topics in early 2015 to a single cultural topic (exercise) interspersed with news and some politics in the summer of 2015. However, the strategy behind this latest set of tactics may have still remained unclear.

Late 2015 and on: Most Internet Research Agency tweets still in English. Topics were more specific, more varied, and more polarizing.

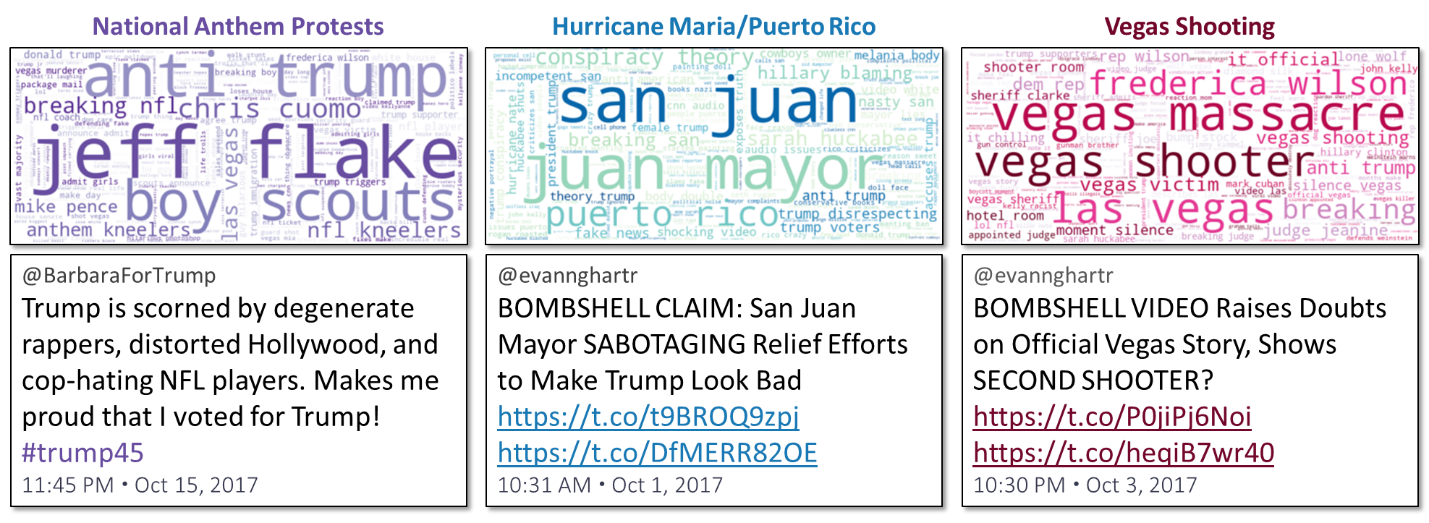

Fast-forward to the fall of 2015. The Russians’ topic pattern changed again — topics were now more specific, more varied, and more polarizing. Many tweets were still associated with the “Local News” and “Politics” topics. By now, though, many “Politics” tweets had adopted a more polarizing tone. Other topics began to emerge, including “Guns” and “Terrorism.”

Figure 4: Three Internet Research Agency Topics with Representative Tweets, December 2015.

The fall of 2015 (one year before the 2016 election) was the earliest point at which our artificial intelligence-powered indications and warnings could have helped reveal Russia’s strategy to sow discord in the English-speaking world. This result would not have been a surprise: the Russians have wielded active measures against the United States and its allies since the Cold War.

The Russians’ overall tactics remained largely unchanged after that. In October 2017 (one year after the election), their English tweet topics were still specific and even more negative and polarizing. Topic labels now included “National Anthem Protests,” “Hurricane Maria/Puerto Rico,” and “Vegas Shooting”:

Figure 5: Three Internet Research Agency Topics with Representative Tweets, October 2017.

Connecting the Dots: From Past to Present to Future

Artificial intelligence applications like the topic-sorting software tool we discussed above could have helped analysts track changes in the Russians’ observable tactics over time, thus providing early indications and warnings of their intent. Moving forward, how could the U.S. intelligence community rapidly and efficiently repeat this type of analysis in 2020 and beyond?

We envision a dashboard system for decision support. We anticipate situations in which a U.S. intelligence analyst is tasked with monitoring all tweets posted by thousands of accounts already attributed to foreign adversaries. The analyst could pose a series of questions and use the dashboard system to find answers.

For example, starting with all tweets posted by the flagged adversary accounts, the analyst could query, “Show me all tweets that were posted in the last month, in the German language, from a supposed German location, and between 0200 and 0500 Central European Time,” thinking that this is the period of night when most real Germans are asleep. The analyst could filter down further with a second query such as, “Show me all tweets that were related to the topic labels ‘Coronavirus’ or ‘Social Distancing.’” If the analyst found a particularly interesting tweet, she could drill down even further, querying, “Show me all tweets like this one …” indicating that she wished to see all tweets associated with a similar combination of topics, “… from March 2020,” remembering that this was the month in which Germany first imposed social distancing restrictions. Of these tweets, the analyst could then query, “Show me all tweets with the hashtag #Wuhan,” and so on.

This human-driven, drill-down approach could allow the analyst to connect the dots between adversaries’ tactics and strategies. As in the query example above, the analyst could use the dashboard to explore hypotheses about how adversaries sowed discord among NATO allies about the origin of the novel coronavirus during the early days of the pandemic.
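The drill-down queries described above can be pictured as a chain of filters, each applied to the results of the last. The sketch below is one hypothetical way to structure that. The `Tweet` fields, the filter helpers, and the sample records are all assumptions, timestamps are assumed to be already converted to Central European Time, and the “show me all tweets like this one” similarity query is left out.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Tweet:
    text: str
    posted_at: datetime        # assumed already converted to Central European Time
    language: str              # e.g. "de", "en"
    claimed_location: str      # location the account claims, possibly spoofed
    topics: frozenset = frozenset()    # analyst-assigned topic labels
    hashtags: frozenset = frozenset()  # stored lowercase, e.g. "#wuhan"

def drill_down(tweets, *predicates):
    """Keep only the tweets that satisfy every predicate."""
    return [t for t in tweets if all(p(t) for p in predicates)]

def in_language(code):
    return lambda t: t.language == code

def claims_location(name):
    return lambda t: t.claimed_location == name

def between_hours(start, end):
    return lambda t: start <= t.posted_at.hour < end

def posted_in_month(year, month):
    return lambda t: (t.posted_at.year, t.posted_at.month) == (year, month)

def has_any_topic(*labels):
    wanted = frozenset(labels)
    return lambda t: bool(t.topics & wanted)

def has_hashtag(tag):
    return lambda t: tag.lower() in t.hashtags

# Invented placeholder records, for illustration only.
TWEETS = [
    Tweet("A", datetime(2020, 3, 14, 3, 15), "de", "Germany",
          frozenset({"Coronavirus"}), frozenset({"#wuhan"})),
    Tweet("B", datetime(2020, 3, 20, 14, 0), "de", "Germany",
          frozenset({"Social Distancing"})),
    Tweet("C", datetime(2020, 3, 2, 2, 30), "en", "United States",
          frozenset({"Sports"})),
    Tweet("D", datetime(2020, 4, 1, 4, 45), "de", "Germany",
          frozenset({"Coronavirus"}), frozenset({"#wuhan"})),
]

# Each query narrows the previous result, mirroring the analyst's questions.
night = drill_down(TWEETS, in_language("de"), claims_location("Germany"), between_hours(2, 5))
on_topic = drill_down(night, has_any_topic("Coronavirus", "Social Distancing"))
march = drill_down(on_topic, posted_in_month(2020, 3))
tagged = drill_down(march, has_hashtag("#Wuhan"))
print([t.text for t in tagged])
```

Keeping each filter as a small, separate function means an analyst-facing dashboard could combine them in any order, which matches the exploratory way the questions are posed.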

Whodunit?

Of course, to infer the adversary’s strategy, one must first tackle the problem of attribution — identifying the adversary accounts in the first place. We did not create a “Russian troll detector” as part of our analysis. Instead, we relied upon Twitter’s attributions. Developing a “troll detector” is a difficult task. Trolls and bots are not unique to Russia. America has trolls of its own. Twitter is awash with angry or simply mischievous users of all nationalities who just like to stir the pot. Social media companies have proprietary methods for identifying accounts operated by clandestine organizations like the Internet Research Agency — but Twitter does not fully disclose its attribution methods to the public. Twitter itself has admitted that it has not always gotten it right. In 2019, Twitter realized that several thousand tweets it had originally attributed to the Internet Research Agency were actually posted by a similar organization in Venezuela. Still, social media platforms have expertise in tweet attribution. Should the U.S. government let the private sector lead the way in attribution and focus its manpower on the analysis of already-attributed tweets instead? There are pros and cons either way.

Rising to the Challenge

Regardless of who performs the attribution, the good news is that the U.S. intelligence community now knows what it’s up against. Fortunately, the United States already possesses the three key areas of expertise needed to address the threat of social media information operations: science and technology, geopolitics and foreign language, and social science. From a technical perspective, we used artificial intelligence techniques to track changes in Russia’s tactics at a monthly resolution. Moving forward, adversaries’ tactics may change much more frequently, on a weekly or even daily basis. More complex artificial intelligence techniques could potentially model the statistical correlation between tweets over time, automatically tracking which tweet topics continue from month to month, without as much toil and trouble from the analyst. Of course, an artificial intelligence system can’t do the entire job by itself. The human analyst is still needed, with human expertise in geopolitics and foreign language. The tweet language (English, Russian, German, Ukrainian) and the supposed region from which the tweet was posted (United States, Russian Federation, Azerbaijan, United Arab Emirates) must also be considered. Although the Internet Research Agency trolls used virtual private networks to mask the true locations from which they tweeted, their spoofed locations reveal where they wanted their audience to think their tweets originated, providing insight into which regions of the world they were targeting. Such insight could help fill in the missing link between observing their tactics and inferring their strategies, a step that is hard for a machine to do on its own.
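As a rough illustration of tracking which topics continue from month to month, the sketch below links each topic in the current month to its most similar topic in the previous month, using cosine similarity between word-weight vectors. This is a much simpler stand-in for the statistical models alluded to above, not one of them. The similarity threshold, the topic names, and the word weights are invented.

```python
import math

def cosine(a, b):
    """Cosine similarity between two sparse word-weight dictionaries."""
    dot = sum(weight * b.get(word, 0.0) for word, weight in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def link_topics(prev_topics, curr_topics, threshold=0.5):
    """Map each current topic to the best-matching previous topic, or None.

    Both arguments are dicts of {topic_name: {word: weight}}.
    A None link suggests a topic that is new this month.
    """
    links = {}
    for name, words in curr_topics.items():
        best_name, best_score = None, threshold
        for prev_name, prev_words in prev_topics.items():
            score = cosine(words, prev_words)
            if score >= best_score:
                best_name, best_score = prev_name, score
        links[name] = best_name
    return links

# Hypothetical topic-word weights for two months.
JANUARY = {
    "Local News": {"news": 0.4, "local": 0.3, "police": 0.2, "fire": 0.1},
    "Sports": {"sports": 0.5, "nfl": 0.3, "game": 0.2},
}
JULY = {
    "topic_a": {"news": 0.5, "police": 0.3, "killed": 0.2},
    "topic_b": {"workout": 0.6, "gym": 0.4},
}

for name, previous in link_topics(JANUARY, JULY).items():
    print(name, "continues" if previous else "is new", previous or "")
```

Topics that link back to an already labeled topic could inherit its label, leaving the analyst to review only the unlinked ones.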

Last but not least, the U.S. government should not forget the “social” in “social media.” Social science expertise can not only help the human analyst make sense of the answers her system spits out in response to her queries but can also help her formulate her queries in the first place, all in the context of a solid understanding of the relevant geopolitics and language quirks. America’s expertise in all of these areas can pave the way for using artificial intelligence for dynamic information warfare just in time to meet the challenges of the 2020s.

Dr. Shelley Cazares, Emily Parrish, and Dr. Jenny Holzer are research scientists at the Institute for Defense Analyses in Alexandria, Virginia, a nonprofit corporation operating three federally funded research and development centers. Cazares earned her B.S. in electrical engineering and computer science from MIT and Ph.D. in engineering science as a Marshall Scholar in the University of Oxford’s signal processing and neural networks research laboratory. Parrish graduated from the College of William and Mary with a B.S. in chemistry and is currently earning her M.S. in data analytics at The George Washington University. Holzer earned her B.S. in physics at Ohio University and Ph.D. in physics at the University of Cincinnati.

Image: Darron Birgenheier