Wrong does not cease to be wrong because the majority share in it.

I once overheard two Air Force signals analysts fiercely debating whether a medium-range, single-role, jet bomber was training to employ rockets or bombs. (The correct answer is that bombers drop bombs.) These analysts were green but also bright and industrious, yet they still spent the better part of an hour debating. Why would two professional military analysts disagree on such a basic question? As I saw it, they lacked a contextualized understanding of the subject of their analysis. Instead, they leaned on the intelligence discipline in which they were specialized. They were signals analysts, and no matter how many times they looked at the signals, they still had, essentially, a fill-in-the-blank word problem: A medium-range, single-role, jet bomber was training to employ __________ (rockets / bombs). Eventually, a senior analyst came to the rescue. Turns out, the bomber was training to employ … bombs.

As incredible as it sounds, episodes like this play out time and again in Air Force intelligence missions. Why could the senior analyst solve this when the young analysts could not? Does one need over 20 years of professional experience to know that bombers train to employ bombs? Certainly not. The issue is not experience — it is behavior.

Air Force learning policy develops the wrong behaviors in its intelligence analysts. Operations require analytic thinkers, but current learning pipelines only produce task-based thinkers, that is, checklist thinkers. The policy makes tribes of intelligence disciplines by training workers in specific tools, software suites, and working aids (e.g., geospatial analyst, signals analyst, fusion analyst, language analyst). The Air Force needs analysts. Perhaps most importantly, in Air Force intelligence, there is no common, practical definition of what an analyst or analysis is. The Air Force will face an increasingly complex set of challenges in the years ahead as the country competes with China and Russia. As a result, Air Force intelligence should focus less on training and more on educating its analysts in their roles as advocates, skeptics, and researchers.

Air Force Learning Policy Needs to Adapt

The Air Force’s one-size-fits-all learning policy is over-systematized. In trying to prescribe a method to efficiently satisfy the entire force’s learning needs, the learning policy is so myopic and specialized that it can only handle very few types of inputs. The learning policy cannot discriminate between the hugely different levels of complexity that exist in real-world learning requirements, behavior assessments, even the students and instructors themselves. It forces adaptation to the system by assuming the inputs meet its unimaginative, low-bar specifications. This is different from a system that adapts to new challenges. The result is less a hammer seeing everything as nails and more a woodchipper turning all species of trees to sawdust.

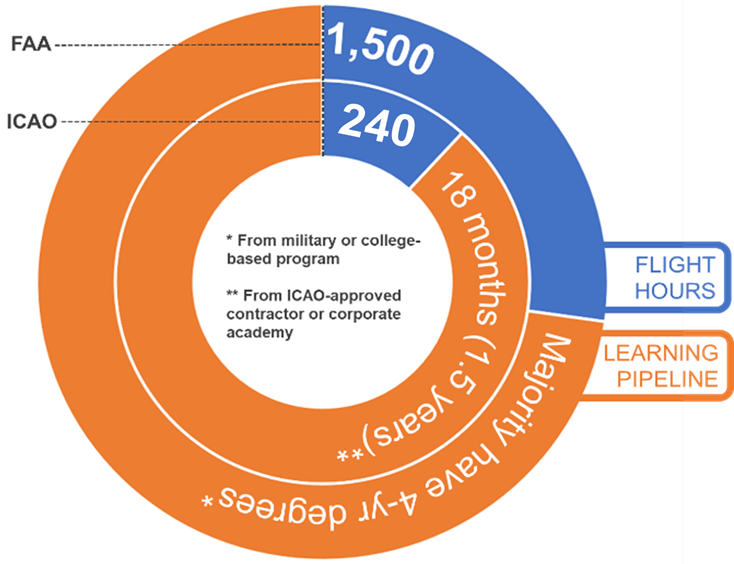

A practical example of a failure of learning policy was the tragic Boeing 737 MAX 8 crashes in 2018 and 2019. Though the crashes were a result of design and mechanical issues, pilot error played a role. Since 2006, the International Civil Aviation Organization systematically “streamlined” its learning program by focusing on “competency-based training” to meet increased demands. The result was checklist-dependent co-pilots competent with known tasks but unable to solve unanticipated problems. The crashes, unfortunately, validated the dangers of “automation dependency.” The International Civil Aviation Organization model is over-systematized.

Figure 1: Informal comparison of International Civil Aviation Organization versus Federal Aviation Administration co-pilot (first officer) certification standards in 2021.

Source: Image generated by the author.

In contrast, Federal Aviation Administration requirements prioritize training and education, producing co-pilots able to solve problems they had yet to encounter. The United States also flew the MAX 8 at this time. While International Civil Aviation Organization first officers were taught to follow the tasks in the plane’s manual, Federal Aviation Administration pilots were taught to think through scenarios. The reality is that there is hardly any daylight between the Air Force’s learning policy and the International Civil Aviation Organization’s, in that both create task-based, checklist-dependent workers. The International Civil Aviation Organization arrived at its failed learning system by overvaluing “efficiency” with its competency-based training (I must point out that some Air Force initiatives are guilty of this as well). The Air Force arrived at its failed learning system by not updating its policy at the pace at which its intelligence missions evolved.

“We Need to Fix Training”

The Air Force should learn from the International Civil Aviation Organization’s error by placing more value on education and less on training. Education is an investment that pays long-term dividends for the individual, the mission, the Air Force, and the nation.

Over my 15-year career in Air Force intelligence, I have frequently heard many colleagues say, “We need to fix training.” However, training is not the solution. Intelligence missions fail to “fix training” because, as an organization, they conflate training and education. This problem is exacerbated by the recent removal of doctrinal definitions of “training” and “education,” which were previously contained in Air Force Doctrine Vol II: Leadership, Appendix C: Education and Training:

Although interdependent, education and training are fundamentally distinct in application. Education prepares individuals for dynamic environments, while training is essential in developing skill sets. Education and training are complementary and will commonly overlap; however, recognition of the distinction between them is essential to the approach taken. Training approaches applied to educational situations will be less effective, as will educational approaches applied to situations in which training is more appropriate. (Air Force Doctrine Vol II: Leadership, p. 50)

Figure 2: Difference between Training and Education derived from Air Force Doctrine Vol II: Leadership, Appendix C: Education and Training, p. 50 to 53

As a teacher, curriculum developer, and analytic practitioner in the Air Force, I find these definitions prudent and effective. In my experience, describing the specific learning modality being used helps students mentally orient themselves. If students expect the consistency and certainty of training while being educated, they are likely to become frustrated and confused, expecting clear answers rather than interpretive lenses and conceptual frameworks. Likewise, if students expect the interpretive nature and debate-driven form of education while being trained, they over-complicate and over-analyze the training, making the process unnecessarily cumbersome.

The complexity of learning for training is shallow. The complexity of learning for education is much deeper, and the variance between beginning and ending is more drastic, starting at greater depth and progressing toward shallow’s edge. Training’s relevance quickly atrophies. Training often does not relate beyond the topic at hand. Education teaches analysts to solve unknowns, increase the quality of their judgement, and develop courses of action.

Systemic Bias in Air Force Policy

The root cause of the Air Force’s misaligned learning system is Air Force policy itself. In highlighting that the Air Force thinks all behaviors are built through training, I have often encountered this statement: “The policy isn’t wrong, it’s how people interpret the policy that is wrong.” To test this claim, I conducted a content analysis of Air Force Handbook 36-2235 Volumes 1-13, which details the Air Force’s Instructional System Design. From the 2,450 pages of text, I enumerated 71 words related to “training” and “education,” creating a dataset of approximately 18,200 words (note: all footnotes, endnotes, and reference citations were scrubbed prior to analysis). The result was a ratio of 18:1 “training” words versus “education” words, which increased to 35:1 if I removed Volume 10, Information for Designers of Instructional Systems Application to Education, in which the only examples are for the officer corps (the word “enlisted” does not appear once). My analysis revealed a systemic bias toward building training, not education. Training is the baked-in learning method, the primary solution. This indicates the policy is already biased. If that is the case, the argument that the policy has simply been misinterpreted holds little water. Furthermore, to argue that instructors and training units at the lowest echelons are bumbling, lazy, or simply misinterpreting learning policy is insulting and wholly sidesteps leadership responsibility.
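A term-ratio count of this kind could be reproduced along the following lines. This is only a minimal sketch, not the method used for the figures above: the two term lists are hypothetical stand-ins for the 71 words (which are not listed here), the volume names and sample sentences are invented placeholders, and the real handbook text would need to be loaded and scrubbed of citations first.

```python
import re
from collections import Counter

# Hypothetical term lists; the 71 words used in the article are not given.
TRAINING_TERMS = {"training", "train", "trainee", "trainer"}
EDUCATION_TERMS = {"education", "educate", "educator"}

# Invented placeholder text standing in for scrubbed handbook volumes.
TOY_VOLUMES = {
    "vol_a": "Training the trainer improves training. Education matters.",
    "vol_b": "Educate the educator through education; train often.",
}


def count_terms(text):
    """Return (training_count, education_count) for one block of text."""
    words = Counter(re.findall(r"[a-z]+", text.lower()))
    training = sum(words[term] for term in TRAINING_TERMS)
    education = sum(words[term] for term in EDUCATION_TERMS)
    return training, education


def totals(volumes, exclude=()):
    """Sum the counts over all volumes, skipping any named in `exclude`."""
    training = education = 0
    for name, text in volumes.items():
        if name in exclude:
            continue
        t, e = count_terms(text)
        training += t
        education += e
    return training, education


def ratio(training, education):
    """Training-to-education ratio, or None when no education terms occur."""
    return training / education if education else None


print(ratio(*totals(TOY_VOLUMES)))
print(ratio(*totals(TOY_VOLUMES, exclude=("vol_b",))))
```

The `exclude` argument mirrors the step of recomputing the ratio with one volume removed, which makes it easy to see how much a single volume shifts the overall balance.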

This bias is apparent not only in content analysis of Air Force policy but also in the ways the Air Force conceives of its jobs. Below is an example of a “job breakdown” according to Air Force Handbook 36-2235 Vol 9, Information for Designers of Instructional Systems Application To Technical Training. This model describes each level as bricks in a two-dimensional pyramid, down to the activities (subtasks) as binary, yes/no behaviors: in essence, a checklist.

Figure 3: “Job Breakdown” model AFH 36-2235 Vol 9, pg 68

When applied to an intelligence analyst, this model fundamentally distorts the job. There is relatively small distortion when it comes to using tools, definitions, software suites, report formatting standards, etc. But there is massive distortion when applied to behaviors such as critical thinking, argumentation, and other sub-behaviors of analysis. This model is remarkably ineffective at conceptualizing what an analyst actually is and therefore what analysis is as well. So what is an analyst? Is it merely the dictionary definition of “a person who analyzes”? This is hardly useful. To create appropriate, effective job analysis for intelligence missions’ instructional system design, the Air Force should adopt a useful way to conceive of an “analyst” and therefore of “analysis” as well.

Context-Driven Learning Determinants

An Air Force intelligence analyst is not a single job. In fact, it is a knowledge worker at the intersection of three roles: advocate, skeptic, and researcher (see Figure 4). By considering an analyst as the intersection of these roles, one may better understand how analysts embody the traits of each during their mission. The skeptic doubts the truth or value of both intrinsic (held by themselves) and extrinsic (held by an external person or group) beliefs and requires that both be held at risk until validated by evidence. The advocate relies on cogent argumentation to defend or maintain a theory, proposal, or policy and is proactive, advancing their own requirements and supporting the requirements of others.

Finally, the researcher is the core role and the foundation of an intelligence analyst. A researcher is curious, studious, and finds information to clarify unknowns, pulling from multiple resources and knowledge domains.

Figure 4: Anatomy of an Analyst

Source: Image generated by the author.

An analyst must strengthen each of these roles and endeavor to recognize situational or foundational imbalances. Each role serves to strengthen the other two and in turn develop sub-roles that enhance an analyst’s precision and flexibility.

Next, we must better conceive of what is actually happening during analysis. For my purposes, what makes good analysis is not the concern; that is well-trod territory. Instead, I offer a model to consider the concurrent, convergent process of simultaneous ingestion and evaluation, receiving information while comparing it to previous beliefs and assumptions. Analysis can be linear or non-linear; constrained or expansive; diagnostic or predictive. In the deconstruction below (see Figure 5), “knower” is the core component. “Knowing” is the active ingestion of data and information, while “known” is data and information already categorized, labeled, or otherwise contextualized.

Figure 5: Analysis Deconstructed

Source: Image generated by the author.

The analyst is the knower. In this way, the analyst is the sum of all their experiences, biases, intentions, and motivations. Therefore, the volume, diversity, and quality of knowledge and experiences an analyst gains directly impacts the quality of their analysis.

If you will recall the hapless, junior analysts from the introduction, their limitation was lacking a basic understanding of air weapons systems. They lacked a contextual understanding of what they were tasked to analyze. Think of context as the wheel that scopes the aperture of analysis. If the aperture is too wide, too much information enters the analytic process. All that extra information makes it difficult to analyze with precision. My coworkers did not know which information to keep out of the analytic process, so it seemed reasonable to assume a bomber might use rockets while conducting bombing training. They knew the definitions of these things (bomber, rocket, training, etc.), but not how they existed in practice. Had their aperture been smaller, their analysis would have been more focused.

If context is the wheel that scopes the aperture of analysis, then context-driven learning determinants are a useful methodology to isolate learning needs. Contextualized knowledge allows intelligence analysts to fill in the blanks. Now let’s consider another fill-in-the-blank exercise and how Air Force intelligence missions and intelligence analysts may develop context-driven learning determinants:

Two fighter jets are committed in a high-aspect beyond visual range engagement. Fighter 1 is carrying standard AIM-9 and Fighter 2 is loaded with AIM-7M. At 25 nautical miles of range, Fighter _______ takes a shot.

When I have posed this question to several of my analytic colleagues, they tend to lean on whichever intelligence discipline they were trained in. A geospatial analyst may offer to compare before-and-after images of aircraft on a flight line (so many things would need to go “right” for that level of fidelity). An electronic analyst may suggest a deep dive into radar characteristics (an extremely time- and work-intensive endeavor under the best circumstances). For every type of intelligence discipline there are as many technical answers. And they are all wrong. None of these behaviors adjusts the aperture of analysis.

The solution is not a technical one — it is a research problem. What is high-aspect? What is beyond visual range? What is the difference between the AIM-9 and AIM-7M missiles? These are the questions which, when answered, reveal that Fighter 2 took the shot. By referencing credible documents or questioning a credible source, like a fighter pilot, the analyst would learn “high-aspect” means the two fighters are flying toward each other; “beyond visual range” means the pilots are relying on internal or outside instruments such as radar to know the other’s location; and finally, AIM-7M is a semi-active radar missile, while the AIM-9 is an infrared missile. Infrared missiles home in on the hottest part of the jet, generally the engines, while a semi-active radar missile is guided onto the target by the firing jet’s on-board radar. Therefore, the AIM-7M missile carried by Fighter 2 is the only missile that could have launched given their relative positions and orientation.

When Air Force intelligence missions look at their own sets of recurring “fill-in-the-blanks,” they can construct context-driven learning determinants based on knowledge gaps. The blanks are the actual and practical “blanks” a mission cannot assume a layperson knows. The benefit of this process is that learning organically becomes a progressive endeavor over time, building upon itself and inherently structured to encourage proactive learning.

The Roots of Revolutionary Learning

The Air Force faces an increasingly contested military and geopolitical environment. Chief of Staff of the Air Force Gen. Charles Q. Brown Jr.’s admonition that “victory goes to the rapid integrator of ideas” means Air Force leadership should take a realistic, honest survey of its systems and policies. If Air Force intelligence provides the service with decision advantage, then enhancing the quality and capability of its learning ecosystems is essential.

The Air Force should institutionalize functional definitions of training and education. The vocabulary of learning used by airmen at all levels directly impacts their ability to conceptualize what learning is for their job and the Air Force at large. The service should cease overly emphasizing the prefix of intelligence jobs (e.g., signals analyst, geospatial analyst) and instead invest in supporting the core knowledge worker: the analyst. It can do so by ensuring that instructional system designers, missions, supervisors, learning facilitators, and individual analysts focus on the three roles of an analyst (advocate, skeptic, and researcher) and within each develop core behaviors and competencies. Finally, Air Force intelligence should develop context-driven learning determinants. The goal of learning should be to imbue knowledge workers (analysts) with cognitive behaviors, contextualized knowledge, and technical competencies to deploy against evolving mission realities and target analysis.

New Air Force intelligence analysts are currently assigned missions they are wholly ill-equipped to solve. Tailoring the service’s learning policy and incorporating the ideas and theories presented here could provide the backbone and continuity for intelligence analysts to support new approaches to defense strategies and ensure future Air Force victories.

Jonathan “JUGGLES” Freeman, Tech Sgt., U.S. Air Force, is currently a senior analyst and senior instructor for the National Cryptologic School-Pacific, where he leads curriculum development for the College of Language and Area Studies and technical direction for the schoolhouse. JUGGLES was awarded Adjunct Faculty of the Year for the National Security Agency in 2019, Middle Enlisted Performer of the Year for the National Security Agency-Hawaii 2020, and the Director of National Intelligence’s Intelligence Community Trainer of the Year for 2020.

Disclaimer: The views expressed in this article are solely the opinion of the author unless otherwise noted or sourced. These views in no way represent the views of the United States Air Force, Department of Defense, National Security Agency, or the U.S. Government. The ideas, theories, and assessments expressed here are based on the author’s studies, insights, experience, and interviews with experts in their areas of specialty. Research and analysis is the product of the author unless otherwise noted or sourced.

Image: U.S. Air Force (Photo by Master Sgt. Donald R. Allen)