Editor’s Note: This article was submitted in response to the call for ideas issued by the co-chairs of the National Security Commission on Artificial Intelligence, Eric Schmidt and Robert Work. It addresses the first question (part a.) on how artificial intelligence might affect the character and/or the nature of war.

In a world of networked sensors and artificial intelligence (AI), optimists have proclaimed that battlefield situational awareness is about to reach unprecedented highs. Don’t believe it. Clausewitz’s “fog of war” is here to stay, and AI may make it worse.

Consider a cautionary tale: Near the end of the 20th century, prominent U.S. defense leaders talked about a revolution in military affairs. “Network-centric warfare” promised to connect a “system of systems” together to provide unprecedented levels of information sharing. It failed to live up to its promise. As one commentator noted, the “fog of war” had been replaced by a “fog of systems.”

For four reasons, the proliferation of battlefield AI and autonomous systems is likely to increase the fog of war. First, new technologies tend to make life harder for individuals, even as they add capability. Second, AI introduces new kinds of battlefield cognition. Third, combining AI, hypersonic weapons, and directed energy will accelerate decision-making to machine speeds. And fourth, AI enables military deception of both a new quality and quantity.

Mercifully, there is hope: The next generation of officers will be ideally suited to face these challenges.

New Technologies and Inflated Expectations

Many major technological changes decrease individuals’ subjective well-being, even as they increase the power of society as a whole. Consider the agricultural revolution: Although farming families could support more children than hunter-gatherers could, historian Yuval Noah Harari argues that this came at the cost of monotonous work, backbreaking labor, unbalanced nutrition, vulnerability to drought, and widespread disease. Despite these drawbacks, societies that adopted agriculture rapidly eclipsed others, for the simple reason that, in the aggregate, they had huge competitive advantages. Harari sees this trend repeated in every major technological revolution that followed: Societies grew stronger while demanding more from individuals.

If this example seems too archaic, consider the raw capability your smartphone has added to your life. With a device that fits in your pocket, you can choose any place you can think of and rapidly acquire satellite imagery, precise navigation, a detailed history, and an accurate weather forecast. Comparing the capabilities of an individual with a smartphone to those of a human from a century ago is no comparison at all. And yet, recent research suggests that smartphones have also made us dangerously distracted and less able to separate work from home. While smartphones are technological marvels, once everyone has one, they raise the expectations that society places on individuals. What used to be an incredible capability (instant communication) has become an expectation to always be available.

Now, consider the changing experience of fighter pilots: Flying fighter aircraft used to be an all-consuming task. As the aircraft became easier to fly, operating additional sensors (radar) and weapons (missiles) added to the cognitive load. As these sensor interfaces became more intuitive, systems became more complex (guided weapons, stealth and electronic attack). At each stage, expectations for individual performance increased even as technological improvements made previously difficult tasks more manageable.

This trend points to why AI will probably not reduce the fog of war as experienced by the average individual combatant. While access to AI will help an individual navigate numerous sensory and informational inputs, the military at large may simply demand more of that individual. Although not every part of the military will be affected equally by AI and autonomy, resource-constrained militaries will shift resources to where they’re needed and cut them where they’re not. For instance, as technology has improved, bomber-type aircraft that once required nearly a dozen crew members now require only two.

A New Kind of Battlefield Cognition

Neuroscience and psychology have shed light on how different personalities perceive the world differently. Good leaders understand this, exploiting this variety of viewpoints to shape a more accurate picture; great combat leaders do so while in mortal peril. Combat leaders learn how to “hear” the picture another warfighter is communicating, parsing useful information out of battlefield ambiguity.

This is complicated enough as it stands. Imagine throwing a fundamentally different kind of cognition into the mix. With general AI (approaching or exceeding human cognitive capability) or advanced, networked, adversarial, narrow AI, this may become a real concern. It’s no exaggeration to say that AI perceives the world differently than a human does, particularly when it comes to discerning human intentions. As toddlers, humans develop a “theory of mind.” When we see another human take an action or make a statement that isn’t fully clear, we postulate that another mind like our own took that action, and we extrapolate why a mind like ours might have done it. This may seem purely academic until you consider that AI doesn’t view a human decision the same way. When trying to deduce a person’s intentions, a neural network would look through the lens of statistical correlations, not a theory of mind. In other words, rather than asking, “Why would another thinking being have taken that action?” AI will incorporate all available inputs and make an inference using patterns learned from its training data.

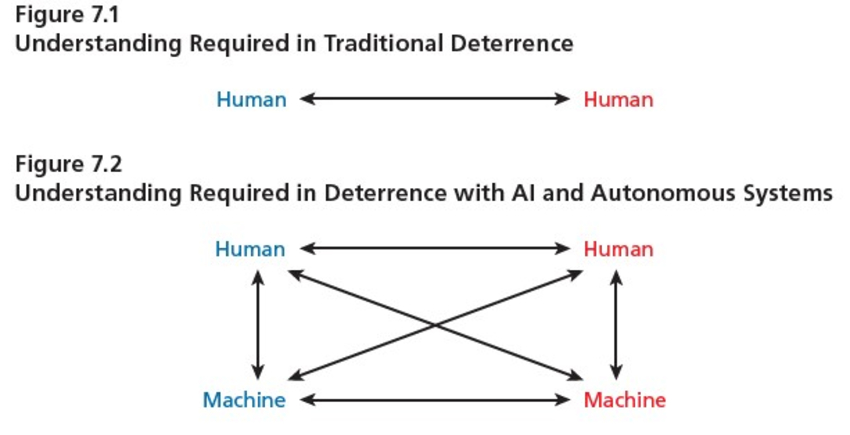

Consider a RAND Corporation wargame examining how thinking machines affect deterrence. In this report, moves made by one side that both human players perceived as de-escalatory were immediately perceived by AI as a threat. When a human player withdrew forces to de-escalate, machines were likely to perceive a tactical advantage to be pursued; when a human player moved forces forward in an obvious (but not hostile) show of determination, machines tended to perceive an imminent threat and engaged. The report found that humans had to contend with confusion not only over what the adversary human was thinking, but with the adversary AI’s perception as well. Furthermore, players had to contend with how their own AI might misinterpret human intentions (whether friendly or enemy). A graphical depiction from the report illustrates just how much this complicates deterrence:

Source: Deterrence in the Age of Thinking Machines (RAND – used with permission).

Some engineers might protest that these problems can be solved by improving algorithms over time. Realistically, it will not be possible to completely remove the unpredictability of how these machines will react to novel situations. AI requires either predetermined rules or large data sets for machine learning, and neither bodes well for novel situations such as war. While “no good plan survives contact with the enemy,” the speed with which machines make decisions magnifies the consequences of such ambiguities. As one report found, a single machine-learning system is a “black box” with an inherent amount of unpredictability, but multiple networked systems have compounding uncertainties that give rise to emergent unpredictability. This problem is exacerbated by interactions with foreign (or adversary) machines. To combat such phenomena, some have called for making AI decision-making more transparent. However, the feasibility of full AI transparency has been called into question.

Fog and Friction at Machine Speed

As technology progresses, the speed of war increases. Consider hypersonic weapons, which move so fast that, from the time of detection to target impact, few meaningful defensive measures are available. One way to counter them is with an even faster weapon: directed energy. However, the timelines for these decision cycles are dangerously short, so human operators will face tremendous pressure to make hasty decisions even when given ambiguous warnings. The multiple fratricides involving U.S. Army Patriot batteries in 2003 and the infamous USS Vincennes incident (both described in detail in Paul Scharre’s book Army of None) show how shorter decision cycles may result in unintended engagements.

One way to cope with the even shorter decision cycles imposed by hypersonic weapons (such as those being developed by the United States, Russia, and China) is to incorporate autonomy or AI. However, this trend raises questions about accountability, authority, and how to avoid unwanted casualties. To address these concerns, current Defense Department policy mandates a “human in the loop” for offensive lethal decisions, with “human on the loop” autonomy permitted only for the defense of manned sites. Ignoring for a moment how easily terms like “offense” and “defense” can be muddied, it’s worth considering whether mandating particular types of autonomous engagement authority really serves as mitigation.

To understand why mandating a “human in the loop” isn’t a decisive check on AI, consider the kinds of pressures combatants may be under in a war fought at machine speeds. If faced with overwhelming numbers of opponents (e.g., swarms) or attackers moving at hypersonic speeds, the shortened reaction times required for survivability may make any human approval a foregone conclusion. Social psychology literature already suggests that, under time pressure, humans resort to “automatic reasoning” as a shortcut, reducing the likelihood of deliberative reasoning before approving a machine’s recommendation.

The less restrictive option of “human on the loop” engagement authority presents a different problem: “automation bias,” wherein humans place excessive trust in automated technology. This is a sadly well-documented phenomenon in aircraft mishaps and has recently emerged in the public consciousness after self-driving car accidents in which the human “driver” failed to intervene in time.

Thus, whatever policy the Pentagon directs for engagement authority, the increasing pace of warfare may marginalize human decision-makers as a practical necessity. China appears to be planning for this moment in military affairs. One influential Chinese publication famously postulated a “singularity” in future warfare: the point at which decisions to act and react are made so rapidly by machines that humans can make no meaningful contribution and are thus no longer relevant to immediate decision-making.

The overall increase in the pace of war, from both faster weapons and faster machine decision-making, may ultimately sideline humans from many decisions. Once humans are on the margins, automation bias will make them less intellectually engaged in the decisions still left to them.

In sum, far from providing near-total situational awareness, AI and automation will make the fog of war much worse for warfighters.

Deception at Machine Speed

Just as the battlefields of the near future may host two kinds of cognition (human and AI), they may also face multiple kinds of deception. Russia has already proven adept at disinformation campaigns, such as when it created ambiguity surrounding its military operations in Ukraine or when it wreaked havoc on American politics during the 2016 presidential election. Malicious actors in the near future may use AI to conduct such deceptions with greater sophistication, at greater scale, and at lower cost.

More insidiously, AI itself may be a target for deception. Machine-learning algorithms have been famously duped by clever exploitation of weaknesses in pattern recognition. For instance, one team used a few inconspicuous strips of tape to trick a road-sign classifier of the kind used in driverless cars into mistaking a stop sign for a “45 MPH” speed-limit sign. In another case, by subtly altering the surface texture of a small 3D-printed turtle, researchers tricked an algorithm into consistently identifying it as a “rifle.” It is probable that a future opponent will use such techniques to conceal targets or, most worryingly, to make nonhostile entities appear to be valid targets. Although better data sets, testing, and engineering will likely mitigate some of these issues, it will not be possible to eliminate them all.

Thus, AI complicates deception by multiplying the kinds of interactions in which it can occur (human-to-human, human-to-machine, machine-to-human, and machine-to-machine), yielding deception of a new quality. AI may also help misdirection succeed more consistently and at greater scale, enabling deception of a new quantity.

Preparing the Next Generation

How should the military prepare the next generation of warfighters? Here, at last, is some good news: These future military leaders are already preparing themselves. While the shortfall in science, technology, engineering and math (STEM) college graduates relative to industry requirements is much lamented, the reality is that “Generation Z” will have more exposure to widespread AI and autonomy than past generations. These young people have grown up in an era of fake news, deepfakes and misuse of data by corporations they believed they could trust. Many teens are adept at navigating multiple “selves” on different social media sites, and parents are increasingly aware of the need to discuss online privacy concerns with their children. Tools are even being developed to teach young children about data and AI. From these formative experiences, the youngest generation will leave high school and college far more aware of fundamental issues surrounding technology, data, and AI.

As evidence, a recent Princeton study found that when it came to detecting maliciously generated fake news articles, age was the most significant factor: The older the sample population, the worse they fared at spotting fakes, while factors like education, political orientation, and intellect didn’t matter. This remarkable result suggests that the younger generation will probably be better at navigating the ambiguities of the AI era than older ones. When members of this generation begin to enter the military, they will bring this savvy with them.

However, older generations of officers can still provide mentorship, largely by helping younger officers strike the right balance between emerging technologies and more traditional capabilities. As an anecdotal example, simulator instructors in A-10C units (most of whom are retired A-10 pilots) regularly remark on how quickly young pilots pick up the newest technology integrated into the venerable jet. Yet young pilots often lack the maturity to know when new tools are ill-suited to a problem or simply distracting. Older pilots disparagingly label such high-tech features “face magnets” for their ability to distract.

Although anecdotal, this example provides a useful analogy. Young officers will benefit from an ongoing discussion of how to recognize scenarios in which AI or autonomous systems are likely to become confused or to make decisions too hastily. In training, more experienced officers should balance scenarios that allow “full-up” use of high-tech tools with scenarios that impose insidious degradation or deception of thinking machines.

Mentors can also guide mentees toward education. For AI fundamentals, supervisors might free up time for subordinates to take advantage of the growing trend of open-source micro-credentials. Senior leaders (in addition to the pressing need to ensure their own AI proficiency) could even consider adding such courses or open-source classes (from places like edX, Coursera, MIT OpenCourseWare, or Khan Academy) to their annual reading lists. For broader perspective, leaders can encourage subordinates to seek out books that examine cognitive processes (Daniel Kahneman’s Thinking, Fast and Slow) or statistically based reasoning (Hans Rosling’s Factfulness).

Preparing for the Era of Thinking Machines

Fog and friction will likely be as prevalent in the era of thinking machines as at any other time in history. The U.S. military should view with great skepticism any optimistic claims that new technology will remove the fog of war. Instead, by taking a more realistic view that fog and friction are here to stay, the U.S. military can focus on training its leaders, present and future, to navigate the increasing complexity and dynamism of a battlefield operating at machine speeds. These transitions are difficult, but they have been successfully navigated before. By investing in future leaders and their education, the United States can do so again.

Zach Hughes is a U.S. Air Force senior pilot with more than 2,300 hours in the A-10C, including 1,100 combat hours in Afghanistan, Syria, and Iraq. He currently researches AI and defense policy at Georgetown University. The views expressed are those of the author and do not reflect the official policy or position of the U.S. Air Force, Department of Defense or the U.S. government.

Image: U.S. Marine Corps (Photos by Lance Cpl. Shane T. Manson)